Fetch metadata from the PDC API

This tutorial provides an example of how to fetch metadata for data imported from the PDC. Learn how to import data from the PDC.

Introduction

This short tutorial shows how to use Data Cruncher and the PDC API to get metadata for proteomic data imported from the PDC. Data Cruncher is an interactive environment within the CGC that allows you to perform further analyses of your data using JupyterLab or RStudio. The PDC API retrieves the most up-to-date metadata for the files in your CGC projects. We will also show how to find the genomic data in the TCGA GRCh38 dataset that corresponds to the proteomic data from the PDC, and how to import that data into a project on the CGC.

Some NCI data are under embargo for publication and/or citation. For details, check the study of interest on the NCI Proteomic Data Commons.

The use case shown in this tutorial is for demonstration purposes; you can customize the code to retrieve the metadata of your choice.

Prerequisites

- An active account on the CGC.

Steps

- Create a project on the CGC.

- Use Data Cruncher to retrieve metadata from the PDC API.

- Create iTRAQ4 to Case Submitter ID mapping for RMS files.

- Query the TCGA GRCh38 dataset using the CGC Datasets API.

1. Create a project on the CGC

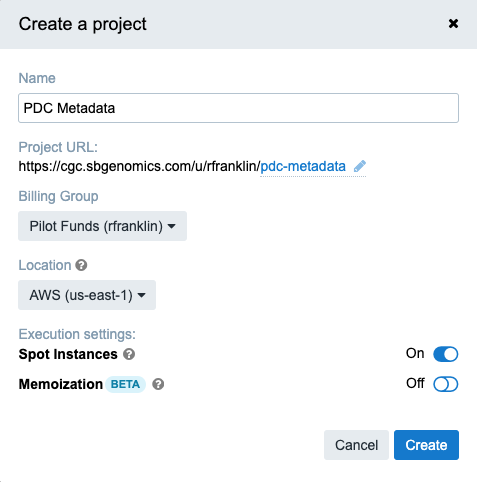

- On the CGC home page, click Projects.

- In the bottom-right corner of the dropdown menu, click + Create a project. The project creation dialog opens.

- Name the project PDC Metadata.

- Keep the predefined values for the other settings. To learn more about project settings, see the project settings documentation.

- Click Create. The project is now created and you are taken to the project dashboard.

The next step is to create a Data Cruncher analysis and use it to retrieve PDC metadata.

2. Use Data Cruncher to extract metadata from the PDC API

The steps below show how to fetch PDC metadata from within an interactive analysis. This part of the tutorial incorporates and demonstrates part of the code and instructions originally available in the PDC Clustergram example from the PDC documentation.

- In the PDC Metadata project, click the Interactive analysis tab on the right.

- On the Data Cruncher card, click Open. You are taken to Data Cruncher.

- In the top-right corner, click Create new analysis. If you don't have any previous analyses, you will see the Create your first analysis button in the center of the screen instead.

- In the Analysis name field, enter PDC Metadata.

- Select JupyterLab as the analysis environment.

- Click Next.

- Keep the predefined values for Compute requirements and click Start the analysis. The CGC will start acquiring an adequate instance for your analysis, which may take a few minutes. Once the analysis is ready, you will be notified.

- Open the analysis by clicking Open in editor in the top-right corner. JupyterLab opens.

- In the Notebook section of the JupyterLab home screen, select Python 3. You can now start entering the analysis code in the cells. Each time you enter a block of code, run it by clicking the run (play) icon in the notebook toolbar or by pressing Shift + Enter.

- Let's begin by importing the requests and json modules in Python:

```python
import requests
import json
```

- We will now add the function that will be used to query the PDC API:

```python
def query_pdc(query):
    URL = "https://pdc.cancer.gov/graphql"

    # Send the POST graphql query
    print('Sending query.')
    pdc_response = requests.post(URL, json={'query': query})

    # Set up a data structure for the query result
    decoded = dict()

    # Check the results
    if pdc_response.ok:
        # Decode the response
        decoded = pdc_response.json()
    else:
        # Response not OK, see error
        pdc_response.raise_for_status()
    return decoded
```
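query_pdc only checks the HTTP status of the response. Under the GraphQL specification, a server reports query problems (for example, a misspelled field name) in an "errors" list in the response body, and such a response may still arrive with an OK status, in which case result['data'] can be missing or None. The sketch below is a hypothetical helper, raise_on_graphql_errors, that you could apply to the decoded response; it is not part of the PDC documentation.

```python
def raise_on_graphql_errors(decoded):
    """Return decoded['data'], or raise if the GraphQL response lists errors.

    Hypothetical helper (not part of the PDC API): decoded is the dict
    returned by query_pdc().
    """
    errors = decoded.get("errors")
    if errors:
        # Error entries are normally dicts with a human-readable "message"
        messages = "; ".join(
            str(e.get("message", e)) if isinstance(e, dict) else str(e)
            for e in errors
        )
        raise RuntimeError("GraphQL query failed: " + messages)
    return decoded.get("data")


# Usage with a stand-in response (no network access needed)
ok_response = {"data": {"clinicalMetadata": []}}
print(raise_on_graphql_errors(ok_response))
```

In the notebook, you would call it as data = raise_on_graphql_errors(query_pdc(metadata_query)) and then read data['clinicalMetadata'].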

- Let's create the query and use the function above to fetch the case IDs of all breast invasive carcinoma cases with proteome data in the CPTAC2 Retrospective project. The cases are requested in pages of 100 (limit: 100), with the offset increasing by 100 on each request:

```python
cases = []
for offset in range(0, 1000, 100):
    cases_query = """{""" + """getPaginatedUICase(project_name:"CPTAC2 Retrospective",
        analytical_fraction: "Proteome",
        primary_site: "Breast",
        disease_type: "Breast Invasive Carcinoma",
        limit: 100, offset: {})""".format(offset) + """{
        uiCases {
            case_id
            project_name
        }
    }
}"""
    result = query_pdc(cases_query)
    for case in result['data']['getPaginatedUICase']['uiCases']:
        cases.append(case['case_id'])

# Count unique case IDs
print('\nNumber of cases:' + str(len(set(cases))))
```

This outputs query status information and the number of unique case IDs returned:

```
Sending query.
Sending query.
Sending query.
Sending query.
Sending query.
Sending query.
Sending query.
Sending query.
Sending query.
Sending query.

Number of cases:108
```
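The loop above always sends ten requests (offsets 0 to 900), although only 108 unique case IDs come back, so the later pages return few or no records. A common alternative for offset/limit APIs is to stop as soon as a page comes back shorter than the limit. The sketch below assumes a hypothetical fetch_page(offset, limit) callable that returns one page of records as a list; max_records is an optional safety cap in case an API ignores the offset.

```python
def fetch_all_pages(fetch_page, limit=100, max_records=None):
    """Collect records from an offset/limit API until a short page is returned.

    fetch_page(offset, limit) is a hypothetical callable returning a list.
    """
    records = []
    offset = 0
    while True:
        page = fetch_page(offset, limit)
        records.extend(page)
        # A page shorter than the limit means there is nothing left to fetch
        if len(page) < limit:
            break
        # Optional safety cap, in case the API keeps returning full pages
        if max_records is not None and len(records) >= max_records:
            break
        offset += limit
    return records


# Usage with a stand-in data source of 250 records
source = list(range(250))
requested_offsets = []

def fake_fetch_page(offset, limit):
    requested_offsets.append(offset)
    return source[offset:offset + limit]

records = fetch_all_pages(fake_fetch_page, limit=100)
print(len(records), requested_offsets)
```

For the PDC query, fetch_page would build cases_query for the given offset and limit, call query_pdc, and return result['data']['getPaginatedUICase']['uiCases'].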

- We will now import the pandas library, which we will use to format and display the data:

```python
import pandas as pd
```

If the library is not available, install it with pip (for example, by running %pip install pandas in a notebook cell).

- Next, let's set the Study Submitter ID of the study whose metadata we want to retrieve:

```python
study_submitter_id = "S015-1"  # S015-1 is TCGA_Breast_Cancer_Proteome
```

- Now we'll create a query for clinical metadata using the Study Submitter ID defined above:

```python
metadata_query = '''
{
    clinicalMetadata(study_submitter_id: "''' + study_submitter_id + '''") {
        aliquot_submitter_id
        morphology
        primary_diagnosis
        tumor_grade
        tumor_stage
    }
}
'''
```
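Because the field list inside clinicalMetadata determines which columns you get back, it can be convenient to generate the query from a Python list when you customize it. The sketch below is a hypothetical helper, build_clinical_metadata_query; it quotes the study ID with json.dumps, which yields a double-quoted, escaped string that is also a valid GraphQL string literal for ordinary IDs such as S015-1. Which field names are valid is defined by the PDC GraphQL schema, not by this helper.

```python
import json


def build_clinical_metadata_query(study_submitter_id, fields):
    """Return a clinicalMetadata GraphQL query requesting the given fields."""
    field_block = "\n        ".join(fields)
    return (
        "{\n"
        "    clinicalMetadata(study_submitter_id: "
        + json.dumps(study_submitter_id)  # e.g. "S015-1", including the quotes
        + ") {\n        "
        + field_block
        + "\n    }\n}\n"
    )


# Same fields as metadata_query above
fields = ["aliquot_submitter_id", "morphology", "primary_diagnosis",
          "tumor_grade", "tumor_stage"]
print(build_clinical_metadata_query("S015-1", fields))
```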

- Next, let's query the PDC API for the clinical metadata and convert the result into a pandas dataframe indexed by aliquot_submitter_id:

```python
decoded = query_pdc(metadata_query)
matrix = decoded['data']['clinicalMetadata']

# matrix is a list of dicts (one per row); use the keys of the first row as column names
metadata = pd.DataFrame(matrix, columns=list(matrix[0].keys())).set_index('aliquot_submitter_id')
print('Created a dataframe of these dimensions: {}'.format(metadata.shape))
```

- Finally, we'll print the metadata fetched from the PDC and converted into a pandas dataframe:

```python
print(metadata)
```

The output should be:

```
aliquot_submitter_id morphology primary_diagnosis \
TCGA-AO-A12B-01A-41-A21V-30 8500/3 Infiltrating duct carcinoma, NOS
TCGA-AO-A12B-01A-41-A21V-30 8500/3 Infiltrating duct carcinoma, NOS
TCGA-AO-A12D-01A-41-A21V-30 8500/3 Infiltrating duct carcinoma, NOS
TCGA-AO-A12D-01A-41-A21V-30 8500/3 Infiltrating duct carcinoma, NOS
TCGA-AO-A12D-01A-41-A21V-30 8500/3 Infiltrating duct carcinoma, NOS
... ... ...
TCGA-AO-A0JE-01A-41-A21V-30 8500/3 Infiltrating duct carcinoma, NOS
TCGA-AO-A0JJ-01A-31-A21W-30 8520/3 Lobular carcinoma, NOS
TCGA-AO-A0JL-01A-41-A21W-30 8500/3 Infiltrating duct carcinoma, NOS
TCGA-AO-A0JM-01A-41-A21V-30 8500/3 Infiltrating duct carcinoma, NOS
TCGA-AO-A126-01A-22-A21W-30 8500/3 Infiltrating duct carcinoma, NOS

aliquot_submitter_id tumor_grade tumor_stage
TCGA-AO-A12B-01A-41-A21V-30 Not Reported stage iia
TCGA-AO-A12B-01A-41-A21V-30 Not Reported stage iia
TCGA-AO-A12D-01A-41-A21V-30 Not Reported stage iia
TCGA-AO-A12D-01A-41-A21V-30 Not Reported stage iia
TCGA-AO-A12D-01A-41-A21V-30 Not Reported stage iia
... ... ...
TCGA-AO-A0JE-01A-41-A21V-30 Not Reported stage iiia
TCGA-AO-A0JJ-01A-31-A21W-30 Not Reported stage iib
TCGA-AO-A0JL-01A-41-A21W-30 Not Reported stage iiia
TCGA-AO-A0JM-01A-41-A21V-30 Not Reported stage iib
TCGA-AO-A126-01A-22-A21W-30 Not Reported stage iia

[152 rows x 4 columns]
```

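The dataframe contains all 152 rows, but pandas truncates long dataframes when printing them, so only the first and last five rows are shown (with ... in between). To print every row, you can run pd.set_option('display.max_rows', None) before print(metadata).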
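The printed dataframe contains repeated aliquot_submitter_id values (for example, TCGA-AO-A12D-01A-41-A21V-30 appears three times in the truncated output), so the 152 rows do not correspond to 152 distinct aliquots. If you want counts per distinct aliquot, collapse the repeats first. The sketch below does this with the standard library on records shaped like decoded['data']['clinicalMetadata'], keeping the first row seen for each aliquot. The sample rows are a hypothetical subset copied from the output above, and keeping the first row assumes the repeats are identical, as they appear to be here.

```python
from collections import Counter


def summarize_by_stage(records):
    """Count distinct aliquots per tumor_stage.

    records: list of dicts like decoded['data']['clinicalMetadata'].
    Repeated rows for the same aliquot_submitter_id are counted once
    (the first occurrence is kept).
    """
    first_seen = {}
    for row in records:
        first_seen.setdefault(row["aliquot_submitter_id"], row)
    return Counter(row["tumor_stage"] for row in first_seen.values())


# Hypothetical subset of rows copied from the printed output above
sample = [
    {"aliquot_submitter_id": "TCGA-AO-A12B-01A-41-A21V-30", "tumor_stage": "stage iia"},
    {"aliquot_submitter_id": "TCGA-AO-A12B-01A-41-A21V-30", "tumor_stage": "stage iia"},
    {"aliquot_submitter_id": "TCGA-AO-A12D-01A-41-A21V-30", "tumor_stage": "stage iia"},
    {"aliquot_submitter_id": "TCGA-AO-A0JE-01A-41-A21V-30", "tumor_stage": "stage iiia"},
    {"aliquot_submitter_id": "TCGA-AO-A0JJ-01A-31-A21W-30", "tumor_stage": "stage iib"},
]
print(summarize_by_stage(sample))
```

With the dataframe from this step, a similar result can be obtained in pandas with metadata[~metadata.index.duplicated(keep='first')]['tumor_stage'].value_counts().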

3. Create iTRAQ4 to Case Submitter ID mapping for RMS files

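The objective of this section is to demonstrate how to filter files by type, fetch a file's metadata, and use that metadata to map iTRAQ4 labels to Case Submitter IDs. In an iTRAQ 4-plex experiment, up to four samples are labeled with isobaric tags (114, 115, 116 and 117) and analyzed together, so a single RMS file contains spectra from several aliquots; the mapping tells you which case each label belongs to. In this example, we will fetch data about Raw Mass Spectra (RMS) files from the same study used throughout this tutorial, S015-1.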

- Create a query that fetches all RMS files from the study:

```python
rms_query = """{filesPerStudy(study_submitter_id: "S015-1",
    data_category:"Raw Mass Spectra") {
        study_id
        study_name
        file_id
        data_category
}}"""
```

- Execute the query:

```python
rms = query_pdc(rms_query)
```

- Parse the returned data:

```python
# We now have all Raw Mass Spectra files for S015-1
rms_result = rms['data']['filesPerStudy']

# Show the first 5 files
for file in rms_result[:5]:
    print(file)
    print('\n')
```

The returned output should be:

```
{'study_id': 'b8da9eeb-57b8-11e8-b07a-00a098d917f8', 'study_name': 'TCGA_Breast_Cancer_Proteome', 'file_id': '00064e82-5ceb-11e9-849f-005056921935', 'data_category': 'Raw Mass Spectra'}

{'study_id': 'b8da9eeb-57b8-11e8-b07a-00a098d917f8', 'study_name': 'TCGA_Breast_Cancer_Proteome', 'file_id': '0029431e-5cb0-11e9-849f-005056921935', 'data_category': 'Raw Mass Spectra'}

{'study_id': 'b8da9eeb-57b8-11e8-b07a-00a098d917f8', 'study_name': 'TCGA_Breast_Cancer_Proteome', 'file_id': '003914a0-5cd9-11e9-849f-005056921935', 'data_category': 'Raw Mass Spectra'}

{'study_id': 'b8da9eeb-57b8-11e8-b07a-00a098d917f8', 'study_name': 'TCGA_Breast_Cancer_Proteome', 'file_id': '00466270-5cef-11e9-849f-005056921935', 'data_category': 'Raw Mass Spectra'}

{'study_id': 'b8da9eeb-57b8-11e8-b07a-00a098d917f8', 'study_name': 'TCGA_Breast_Cancer_Proteome', 'file_id': '005db03e-5cdf-11e9-849f-005056921935', 'data_category': 'Raw Mass Spectra'}
```

- Select the first RMS file from the returned data:

```python
# Pick the first Raw Mass Spectra file from S015-1
file_id = rms_result[0]['file_id']
```

- Fetch metadata for the selected file ID:

```python
file_query = """{""" + """fileMetadata(file_id: "{}")""".format(file_id) + """{
    file_name
    file_size
    md5sum
    file_location
    file_submitter_id
    fraction_number
    experiment_type
    data_category
    file_type
    file_format
    plex_or_dataset_name
    analyte
    instrument
    aliquots {
        aliquot_id
        aliquot_submitter_id
        label
        sample_id
        sample_submitter_id
        case_id
        case_submitter_id
    }
}
}"""
```

- Execute the metadata query:

```python
result = query_pdc(file_query)
```

- Create a dictionary that maps each iTRAQ4 label to a Case Submitter ID:

```python
label_map = {}
aliquots = result['data']['fileMetadata'][0]['aliquots']
for aliquot in aliquots:
    print(aliquot)
    print("\n")
    label = aliquot['label']
    if aliquot['case_id']:
        case_submitter_id = aliquot['case_submitter_id']
        label_map[label] = case_submitter_id
    else:
        # Aliquots without a case ID are mapped to 'Reference'
        label_map[label] = 'Reference'
```

This code block prints each aliquot record:

```
{'aliquot_id': '34317a3a-6429-11e8-bcf1-0a2705229b82', 'aliquot_submitter_id': 'TCGA-C8-A12V-01A-41-A21V-30', 'label': 'iTRAQ4 115', 'sample_id': '83929378-6420-11e8-bcf1-0a2705229b82', 'sample_submitter_id': 'TCGA-C8-A12V-01A', 'case_id': 'f49043f6-63d8-11e8-bcf1-0a2705229b82', 'case_submitter_id': 'TCGA-C8-A12V'}

{'aliquot_id': 'fd50c409-6428-11e8-bcf1-0a2705229b82', 'aliquot_submitter_id': 'TCGA-AO-A0JM-01A-41-A21V-30', 'label': 'iTRAQ4 114', 'sample_id': '35800901-6420-11e8-bcf1-0a2705229b82', 'sample_submitter_id': 'TCGA-AO-A0JM-01A', 'case_id': 'c10409cb-63d8-11e8-bcf1-0a2705229b82', 'case_submitter_id': 'TCGA-AO-A0JM'}

{'aliquot_id': '6a47a426-ec51-11e9-81b4-2a2ae2dbcce4', 'aliquot_submitter_id': 'Internal Reference', 'label': 'iTRAQ4 117', 'sample_id': '6a479058-ec51-11e9-81b4-2a2ae2dbcce4', 'sample_submitter_id': 'Internal Reference', 'case_id': '6a477ef6-ec51-11e9-81b4-2a2ae2dbcce4', 'case_submitter_id': 'Internal Reference'}

{'aliquot_id': '6dbd35fe-6428-11e8-bcf1-0a2705229b82', 'aliquot_submitter_id': 'TCGA-A8-A08G-01A-13-A21W-30', 'label': 'iTRAQ4 116', 'sample_id': '78f43d0f-641f-11e8-bcf1-0a2705229b82', 'sample_submitter_id': 'TCGA-A8-A08G-01A', 'case_id': '3bdfde9b-63d8-11e8-bcf1-0a2705229b82', 'case_submitter_id': 'TCGA-A8-A08G'}
```

- Let's print out the dictionary:

```python
label_map
```

Here's what the created dictionary looks like:

```
{'iTRAQ4 115': 'TCGA-C8-A12V',
 'iTRAQ4 114': 'TCGA-AO-A0JM',
 'iTRAQ4 117': 'Internal Reference',
 'iTRAQ4 116': 'TCGA-A8-A08G'}
```
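The loop above builds the mapping for the first RMS file only. Wrapping it in a function makes it easy to build one mapping per file, for example by looping over every entry in rms_result and running the fileMetadata query for each file_id. The sketch below is a hypothetical helper, build_label_map, that reproduces the loop's logic without the printing; the sample aliquots are trimmed copies of the records printed above.

```python
def build_label_map(aliquots, missing_case_label="Reference"):
    """Map each iTRAQ4 label to its Case Submitter ID.

    aliquots: the list at result['data']['fileMetadata'][0]['aliquots'].
    Aliquots without a case_id are mapped to missing_case_label, as in
    the loop above.
    """
    label_map = {}
    for aliquot in aliquots:
        if aliquot.get("case_id"):
            label_map[aliquot["label"]] = aliquot["case_submitter_id"]
        else:
            label_map[aliquot["label"]] = missing_case_label
    return label_map


# Trimmed copies of two aliquot records printed above
sample_aliquots = [
    {"label": "iTRAQ4 115", "case_id": "f49043f6-63d8-11e8-bcf1-0a2705229b82",
     "case_submitter_id": "TCGA-C8-A12V"},
    {"label": "iTRAQ4 117", "case_id": "6a477ef6-ec51-11e9-81b4-2a2ae2dbcce4",
     "case_submitter_id": "Internal Reference"},
]
print(build_label_map(sample_aliquots))
```

For several files, you could collect the results in a dict keyed by file_id; note that this sends one fileMetadata query per file.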

4. Query the TCGA GRCh38 dataset using the CGC Datasets API

The final section of the tutorial shows how to connect the proteomic data obtained from the PDC to genomic data in the TCGA GRCh38 dataset, using the Case Submitter IDs (the SubmitterId property) as the link.

- Set up the API URL and your authentication token. Learn how to get your authentication token.

```python
# Query TCGA GRCh38 dataset on Datasets API
datasets_api_url = 'https://cgc-datasets-api.sbgenomics.com/datasets/'

# See the Datasets API documentation for how to obtain your authentication token
token = 'MY_AUTH_TOKEN'
```

Replace MY_AUTH_TOKEN with your authentication token obtained from the CGC.
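Pasting the token into a notebook cell stores it in the notebook file. One common alternative is to read it from an environment variable. The sketch below assumes a hypothetical variable named CGC_AUTH_TOKEN that you set yourself; neither the CGC nor Data Cruncher defines it.

```python
import os


def get_auth_token(env_var="CGC_AUTH_TOKEN"):
    """Read the CGC authentication token from an environment variable.

    CGC_AUTH_TOKEN is a hypothetical name chosen for this example.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable to your CGC token")
    return token
```

With the variable set, token = get_auth_token() replaces the hard-coded assignment.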

- Set up the query that finds the cases with these Case Submitter IDs in the TCGA GRCh38 dataset on the CGC, using the Datasets API:

```python
query = {
    "entity": "cases",
    "hasSubmitterId": ["TCGA-C8-A12V", "TCGA-AO-A0JM", "TCGA-A8-A08G"]
}
headers = {"X-SBG-Auth-Token": token}
```

In this query, the Case Submitter IDs from the iTRAQ4 mapping (excluding the Internal Reference) serve as the linking property: they identify the matching cases (and their Case UUIDs) in the genomic dataset for the proteomic data obtained from the PDC.
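The three IDs above are the TCGA entries of the label_map built in the previous section. Instead of typing them by hand, you could derive them from the dictionary. The sketch below uses a hypothetical helper, case_submitter_ids, that keeps values starting with TCGA- (which drops Internal Reference) and removes duplicates; the IDs come out sorted, so their order differs from the list above. The label_map literal is copied from the earlier output so the block runs on its own.

```python
def case_submitter_ids(label_map, prefix="TCGA-"):
    """Return sorted, de-duplicated Case Submitter IDs from an iTRAQ4 label map.

    Values that do not start with prefix, such as 'Internal Reference'
    or 'Reference', are skipped.
    """
    return sorted({value for value in label_map.values() if value.startswith(prefix)})


# Copied from the label_map output in the previous section
label_map = {
    "iTRAQ4 115": "TCGA-C8-A12V",
    "iTRAQ4 114": "TCGA-AO-A0JM",
    "iTRAQ4 117": "Internal Reference",
    "iTRAQ4 116": "TCGA-A8-A08G",
}
derived_query = {"entity": "cases", "hasSubmitterId": case_submitter_ids(label_map)}
print(derived_query)
```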

- Let's execute the query:

```python
result = requests.post(datasets_api_url + 'tcga_grch38/v0/query', json.dumps(query), headers=headers)
```

- We will now parse and print the Case UUIDs from the result:

```python
cases = []
for case in result.json()["_embedded"]["cases"]:
    cases.append(case['label'])
print(cases)
```

This returns a list of three items:

```
['44bec761-b603-49c0-8634-f6bfe0319bb1', '719082cc-1ebe-4a51-a659-85a59db1d77d', '7e1673f8-5758-4963-8804-d5e39f06205b']
```

- Now, let's fetch and list all experimental strategies and data types available on the CGC for the TCGA GRCh38 dataset:

```python
# List all experimental strategies and data types
files_schema = requests.get(datasets_api_url + 'tcga_grch38/v0/files/schema', headers=headers)

# Experimental strategy
print("Experimental strategy")
print(files_schema.json()['hasExperimentalStrategy'])

# Data type
print("\n")
print("DataType")
files_schema.json()['hasDataType']
```

- We will now create a query that counts the open-access Gene Expression Quantification files generated with the RNA-Seq experimental strategy for the selected cases:

```python
files_query = {
    "entity": "files",
    "hasCase": cases,
    "hasExperimentalStrategy": "RNA-Seq",
    "hasDataType": "Gene Expression Quantification",
    "hasAccessLevel": "Open"
}
```

- Let's execute the query:

```python
result_files_count = requests.post(datasets_api_url + 'tcga_grch38/v0/query/total', json.dumps(files_query), headers=headers)
```

- Let's print out the result:

```python
result_files_count.text
```

If everything went well, you should get the following output:

```
'{"total": 9}'
```

- Now we'll fetch details for the nine files that match our query:

```python
result_files = requests.post(datasets_api_url + 'tcga_grch38/v0/query', json.dumps(files_query), headers=headers)
```

Then create lists containing the file IDs and file names:

```python
file_ids = []
file_names = []
for file in result_files.json()['_embedded']['files']:
    file_names.append(file["label"])
    file_ids.append(file["id"])
```

- Let's print out the list of parsed file names:

```python
file_names
```

You should get a list of all nine file names:

```
['54401c2a-7124-42b7-90d8-6267575bce51.FPKM-UQ.txt.gz',
 '411e5567-82fd-4cc8-99ea-5ed9bd3a198e.FPKM.txt.gz',
 '4d5b0ba8-64d8-404b-9a83-fc4111686afe.FPKM.txt.gz',
 '54401c2a-7124-42b7-90d8-6267575bce51.htseq.counts.gz',
 '411e5567-82fd-4cc8-99ea-5ed9bd3a198e.htseq.counts.gz',
 '411e5567-82fd-4cc8-99ea-5ed9bd3a198e.FPKM-UQ.txt.gz',
 '4d5b0ba8-64d8-404b-9a83-fc4111686afe.htseq.counts.gz',
 '4d5b0ba8-64d8-404b-9a83-fc4111686afe.FPKM-UQ.txt.gz',
 '54401c2a-7124-42b7-90d8-6267575bce51.FPKM.txt.gz']
```
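The nine names follow a UUID.suffix pattern: three UUID prefixes, each with an FPKM, an FPKM-UQ and an HTSeq counts file. If you want to process these files in sets, grouping them by prefix is straightforward. The sketch below is a hypothetical helper, group_by_prefix, that treats everything before the first dot as the prefix; that assumption holds for the names listed here but is not a documented naming rule.

```python
from collections import defaultdict


def group_by_prefix(names):
    """Group file names by the text before their first dot.

    Returns {prefix: sorted list of suffixes}.
    """
    groups = defaultdict(list)
    for name in names:
        prefix, _, suffix = name.partition(".")
        groups[prefix].append(suffix)
    return {prefix: sorted(suffixes) for prefix, suffixes in groups.items()}


# Copied from the file_names output above
listed_names = [
    '54401c2a-7124-42b7-90d8-6267575bce51.FPKM-UQ.txt.gz',
    '411e5567-82fd-4cc8-99ea-5ed9bd3a198e.FPKM.txt.gz',
    '4d5b0ba8-64d8-404b-9a83-fc4111686afe.FPKM.txt.gz',
    '54401c2a-7124-42b7-90d8-6267575bce51.htseq.counts.gz',
    '411e5567-82fd-4cc8-99ea-5ed9bd3a198e.htseq.counts.gz',
    '411e5567-82fd-4cc8-99ea-5ed9bd3a198e.FPKM-UQ.txt.gz',
    '4d5b0ba8-64d8-404b-9a83-fc4111686afe.htseq.counts.gz',
    '4d5b0ba8-64d8-404b-9a83-fc4111686afe.FPKM-UQ.txt.gz',
    '54401c2a-7124-42b7-90d8-6267575bce51.FPKM.txt.gz',
]
for prefix, suffixes in group_by_prefix(listed_names).items():
    print(prefix, suffixes)
```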

- Finally, let's copy the files to a project on the CGC so they can be used in further analyses. We will start by importing the sevenbridges-python library, which is available by default in Data Cruncher:

```python
import sevenbridges as sbg
```

- Let's initialize the CGC API client with the CGC API URL and your authentication token:

```python
api = sbg.Api(url='https://cgc-api.sbgenomics.com/v2', token=token)
```

- Select the destination project on the CGC:

```python
my_project_name = 'test'

# Find your project by name
my_project = [p for p in api.projects.query(limit=100).all() if p.name == my_project_name][0]
```

Replace test with the actual name of your project on the CGC. If no project with that name is found, the last line raises an IndexError.

Let's confirm that we have selected an existing project:

```python
my_project
```

This should return output similar to:

```
<Project: id=rfranklin/test>
```
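The list comprehension above raises a bare IndexError when no project has the given name, and silently picks the first match if more than one project you can access has that name. The sketch below is a hypothetical helper, find_project, that fails with a descriptive message in both cases. It only relies on each project having name and id attributes, as the sevenbridges Project objects used above do; the SimpleNamespace objects are stand-ins so the block runs without CGC access.

```python
from types import SimpleNamespace


def find_project(projects, name):
    """Return the only project called name, or raise LookupError."""
    matches = [p for p in projects if p.name == name]
    if not matches:
        raise LookupError(f"No project named {name!r} was found")
    if len(matches) > 1:
        ids = ", ".join(p.id for p in matches)
        raise LookupError(f"Several projects are named {name!r}: {ids}")
    return matches[0]


# Hypothetical stand-ins for sevenbridges Project objects
demo_projects = [
    SimpleNamespace(name="test", id="rfranklin/test"),
    SimpleNamespace(name="PDC Metadata", id="rfranklin/pdc-metadata"),
]
print(find_project(demo_projects, "test").id)
```

In the notebook, the call would be my_project = find_project(api.projects.query(limit=100).all(), my_project_name).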

- We will now copy the files to the selected project:

```python
for file in file_ids:
    f = api.files.get(file)
    f.copy(project=my_project)
```

Then verify that the files are in the project:

```python
my_files = api.files.query(limit=100, project=my_project.id).all()
for file in my_files:
    print(file.name)
```

If everything went well, the result should include the following files:

```
411e5567-82fd-4cc8-99ea-5ed9bd3a198e.FPKM-UQ.txt.gz
411e5567-82fd-4cc8-99ea-5ed9bd3a198e.FPKM.txt.gz
411e5567-82fd-4cc8-99ea-5ed9bd3a198e.htseq.counts.gz
4d5b0ba8-64d8-404b-9a83-fc4111686afe.FPKM-UQ.txt.gz
4d5b0ba8-64d8-404b-9a83-fc4111686afe.FPKM.txt.gz
4d5b0ba8-64d8-404b-9a83-fc4111686afe.htseq.counts.gz
54401c2a-7124-42b7-90d8-6267575bce51.FPKM-UQ.txt.gz
54401c2a-7124-42b7-90d8-6267575bce51.FPKM.txt.gz
54401c2a-7124-42b7-90d8-6267575bce51.htseq.counts.gz
```

If your project already contained some files, the returned list will also include those files along with the newly copied ones.
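Because the listing can include files that were already in the project, checking the expected names against the listing is more reliable than comparing counts. A minimal sketch with a hypothetical helper, missing_files, that returns the expected names not present in the project:

```python
def missing_files(expected_names, project_file_names):
    """Return expected file names that are absent from the project listing.

    Extra files already in the project are ignored.
    """
    present = set(project_file_names)
    return sorted(name for name in expected_names if name not in present)


# Stand-in data; in the notebook you would pass file_names and
# [f.name for f in my_files]
expected = ["a.FPKM.txt.gz", "a.htseq.counts.gz"]
in_project = ["a.htseq.counts.gz", "older_file.bam", "a.FPKM.txt.gz"]
print(missing_files(expected, in_project))
```

An empty list means every expected file is in the project. Note that my_files comes from .all(), which is consumed as it is iterated, so query the project again before building the name list if you already looped over it.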

The procedure above fetches and copies files that are classified as Open Data and are available to all CGC users. These are aggregated files that can be used for further analyses in Data Cruncher on the CGC. To obtain files containing Aligned Reads from the TCGA GRCh38 dataset, you need access to Controlled Data through dbGaP. If the account you use to log in to the CGC has access to Controlled Data, the files_query defined earlier in this section should instead be:

```python
files_query = {
    "entity": "files",
    "hasCase": cases,
    "hasExperimentalStrategy": "RNA-Seq",
    "hasDataType": "Aligned Reads",
    "hasAccessLevel": "Controlled"
}
```

The rest of the procedure used to find and copy the files to your project on the CGC is the same as the one for Open Data described above.
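Since the Open and Controlled queries differ only in the data type and access level, you could generate both from one function. The sketch below is a hypothetical helper, build_files_query, that uses only the query keys shown in this tutorial; valid values for hasExperimentalStrategy and hasDataType are the ones listed by the files schema request earlier in this section. The case UUIDs are copied from the output above.

```python
import json


def build_files_query(case_uuids, data_type, access_level,
                      experimental_strategy="RNA-Seq"):
    """Return a Datasets API files query dict like the ones in this tutorial."""
    return {
        "entity": "files",
        "hasCase": list(case_uuids),
        "hasExperimentalStrategy": experimental_strategy,
        "hasDataType": data_type,
        "hasAccessLevel": access_level,
    }


# Case UUIDs copied from the output above
case_uuids = ['44bec761-b603-49c0-8634-f6bfe0319bb1',
              '719082cc-1ebe-4a51-a659-85a59db1d77d',
              '7e1673f8-5758-4963-8804-d5e39f06205b']
open_query = build_files_query(case_uuids, "Gene Expression Quantification", "Open")
controlled_query = build_files_query(case_uuids, "Aligned Reads", "Controlled")
print(json.dumps(controlled_query, indent=2))
```

Either dict can then be posted to tcga_grch38/v0/query/total or tcga_grch38/v0/query in the same way as files_query.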
