Cloud infrastructure pricing

Overview

CGC is a cloud-based environment that uses underlying cloud infrastructure to execute analyses, store and transfer data. This use of cloud resources generates costs that are charged by the cloud infrastructure provider and passed through by Seven Bridges. The costs are categorized as follows:

- Compute costs: When you run a task on the CGC, we use one or more compute instances from the CGC's underlying cloud infrastructure provider to process the data.

- Storage costs: When you add your own files to the CGC and put them in a project, they are housed in cloud storage.

- Data transfer costs: These costs are generated due to transferring data out from the cloud storage, e.g. when downloading files from the CGC.

All of these costs are billed on a monthly basis.

About compute costs

Compute costs depend on the cloud infrastructure provider. The CGC has two underlying cloud infrastructure providers: Amazon Web Services (AWS), with its Elastic Compute Cloud (EC2) instances, and Google Cloud Platform (GCP), with its Google Compute Engine instances. Which provider is used depends on the project location (cloud provider and region) selected during project creation.

The following table shows the compute service and charging unit for AWS and GCP:

| Cloud service provider | Service name | Charging unit |
|---|---|---|
| Amazon Web Services (AWS US East) | EC2 | per second |
| Amazon Web Services (AWS US East) | EBS | per second |
| Google Cloud Platform | Google Compute Engine | per second (minimum 1 minute, then 1-second increments) |
| Google Cloud Platform | Persistent SSD disk | per second |

Compute Costs: Amazon Web Services

If you are using an AWS-based location for your project, Amazon EC2 virtual computing environments, known as instances, are used to execute your analyses. There are various types of instances that have different configurations of CPU, memory, storage and networking capacity. See the list of AWS instances used on the CGC.

AWS charges for the use of their compute instances on a per second basis, but the rate depends on the AWS pricing model. The CGC uses two AWS pricing models:

- On-Demand: you pay for compute capacity at a fixed hourly rate.

- Spot: the hourly rate is dictated by the market (supply and demand) for AWS EC2 spare compute capacity.

All public workflows on the CGC are set up to use instances that offer the optimal ratio of price to compute power. You can also optimize the instance selection for your own applications that you want to use on the CGC.

Amazon EC2 On-Demand instances

The rate of On-Demand instances depends on the instance type used and is shown in the following price list.

AWS expresses all rates per hour, but the calculation of the price is done on a per-second basis: the per-hour price is divided by 3600 and then multiplied by the actual number of seconds the instance was running.
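The per-second calculation described above can be sketched in a few lines of Python. This is only an illustration: the function name and the $0.36 hourly rate are hypothetical and are not taken from any AWS price list.

```python
SECONDS_PER_HOUR = 3600


def per_second_cost(hourly_rate_usd: float, seconds_running: int) -> float:
    """Cost of an instance billed per second at a published hourly rate."""
    # Divide the hourly rate by 3600, then multiply by the seconds actually used.
    return hourly_rate_usd / SECONDS_PER_HOUR * seconds_running


# Hypothetical example: a $0.36/hour instance that ran for 10 minutes (600 s).
print(f"${per_second_cost(0.36, 600):.2f}")  # prints $0.06
```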

Amazon EC2 Spot Instances

By default, the CGC uses instances priced using the Spot model.

When you run tasks using Spot Instances, you are charged the current market price for that instance for the time period the instance was running. AWS expresses all rates per hour, but the calculation of the price is done on a per-second basis: the per-hour price is divided by 3600 and then multiplied by the actual number of seconds the instance was running.

The maximum hourly price that you will be charged for an instance is the bid price of that instance. Because the bid price used by the CGC is the On-Demand price for that particular instance, you will never pay more than the On-Demand hourly rate for an instance you're using.

If the market price of a Spot Instance you're using exceeds the bid price (i.e. the On-Demand price for that instance type), the Spot Instance is terminated and the task continues running on one or more On-Demand instances. If the Spot Instance is terminated during the first hour of running the task on the instance, you will not be charged for using it. However, if it is terminated at any point after the first 60 minutes, you will be charged for the entire number of seconds the instance was running.
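The Spot charging rules above can be summarized in a short Python sketch. It rests on several simplifying assumptions that are not part of the CGC or AWS documentation: the market price is treated as constant for the whole run, a termination at exactly 3600 seconds is treated as falling after the first hour, and the function and parameter names are hypothetical.

```python
SECONDS_PER_HOUR = 3600


def spot_charge(market_hourly_rate: float,
                on_demand_hourly_rate: float,
                seconds_running: int,
                terminated_by_provider: bool) -> float:
    """Illustrative charge for one Spot Instance run."""
    # Terminated by the provider within the first hour: no charge.
    # (Assumption: exactly 3600 s counts as "after the first hour".)
    if terminated_by_provider and seconds_running < SECONDS_PER_HOUR:
        return 0.0
    # The bid price equals the On-Demand price, so the rate is capped there.
    effective_rate = min(market_hourly_rate, on_demand_hourly_rate)
    return effective_rate / SECONDS_PER_HOUR * seconds_running


# Hypothetical rates: $0.10/hour Spot market price, $0.44/hour On-Demand price.
print(spot_charge(0.10, 0.44, 1800, terminated_by_provider=True))   # terminated after 30 minutes
print(spot_charge(0.10, 0.44, 7200, terminated_by_provider=True))   # terminated after 2 hours
```

Under these assumptions the first call yields no charge, while the second is charged for all 7200 seconds at the market rate.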

Amazon EBS

Amazon EBS volumes are storage volumes that can be attached to compute instances to provide additional space for file storage while files are being used in computation tasks. EBS volumes can be attached to the following types of instances:

- Instances that do not include any storage space (EBS-only instances), such as c4 and m4 instances. EBS storage is mandatory for these instances and you can define any size from 2 GB to 4096 GB depending on your task's storage requirements during computation.

- Instances that already include storage space, in order to increase the available storage on the computation instance. In this case, the instance storage is completely replaced by the attached EBS storage, up to the maximum of 4096 GB. The option to increase storage size for instances with their own storage is especially convenient for bioinformatics workflows as the files that are used as inputs for computation tasks and the files produced as results can be very large.

EBS is billed on a per second basis, while AWS expresses charges for EBS disk space in GB*hour/month. Please note that Seven Bridges passes through EBS storage costs with no extra charge or fee for accessing AWS services through the CGC.

Cost example

Learn more about how EBS is charged from the example below. Note that the example only serves as an illustration of how EBS costs are calculated. For current EBS prices, refer to the official Amazon EBS pricing chart. Make sure to select US East (N. Virginia) from the Region dropdown menu.

Running RNA-seq Alignment - TopHat on a c4.2xlarge instance with 1TB of EBS:

- c4.2xlarge = 8 CPUs, 15GB RAM (EBS Only) at $0.44 per Hour

- Additional 1TB of EBS disk space at ~$0.10 per GB*hour/month

Assuming that the workflow took 14 hours and 25 minutes (51900 seconds) to complete:

- c4.2xlarge x 14h25m = $6.34

- 1TB EBS x 14h25m = ($0.10 per GB*hour/month * 1024 GB * 51900 seconds) / (3600 seconds/hour * 24 hours/day * 30 days/month) = $2.05
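The arithmetic in this example can be reproduced with the following Python sketch. It uses the illustrative prices from the example, not current AWS prices, and the function names are hypothetical. As in the formula above, a month is taken to be 30 days.

```python
SECONDS_PER_HOUR = 3600
SECONDS_PER_MONTH = 3600 * 24 * 30  # 30-day month, as in the example


def instance_cost(hourly_rate_usd: float, seconds: int) -> float:
    """Per-second billing at an hourly rate."""
    return hourly_rate_usd / SECONDS_PER_HOUR * seconds


def attached_storage_cost(price_per_gb_month: float, size_gb: int, seconds: int) -> float:
    """Per-second billing of attached storage priced per GB per month."""
    return price_per_gb_month * size_gb * seconds / SECONDS_PER_MONTH


runtime = 14 * 3600 + 25 * 60  # 14 h 25 min = 51900 seconds
compute = instance_cost(0.44, runtime)
storage = attached_storage_cost(0.10, 1024, runtime)

print(f"c4.2xlarge: ${compute:.2f}")           # prints c4.2xlarge: $6.34
print(f"1TB EBS:    ${storage:.2f}")           # prints 1TB EBS:    $2.05
print(f"Total:      ${compute + storage:.2f}")  # prints Total:      $8.39
```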

Compute Costs: Google Cloud Platform

Google Compute Engine

If you selected a Google-based location for your project, the Google Cloud Platform (GCP) offers a range of compute instances that can be used to run computation tasks. You will be able to select from n1-standard, n1-highmem and n1-highcpu instances to run tasks on the CGC. The full list of GCP instances that can be used on the CGC is available on this page.

The use of Google Compute Engine instances is charged on a per second basis. All instances are charged for a minimum of 1 minute. After the initial minute, instances are charged in 1 second increments.
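The one-minute minimum can be expressed as a small Python sketch. The function names are hypothetical, and the treatment of fractional seconds (rounded up to the next whole second) is an assumption made for illustration only.

```python
import math

SECONDS_PER_HOUR = 3600
MINIMUM_BILLED_SECONDS = 60


def gce_billable_seconds(seconds_running: float) -> int:
    """Seconds billed for one instance run: at least 1 minute, then 1-second steps."""
    if seconds_running <= 0:
        return 0  # assumption: an instance that never ran is not billed
    return max(MINIMUM_BILLED_SECONDS, math.ceil(seconds_running))


def gce_cost(hourly_rate_usd: float, seconds_running: float) -> float:
    return hourly_rate_usd / SECONDS_PER_HOUR * gce_billable_seconds(seconds_running)


print(gce_billable_seconds(20))  # prints 60: a 20-second run is billed as 1 minute
print(gce_billable_seconds(90))  # prints 90: billed per second after the first minute
```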

Google SSD persistent disks

Google Cloud Platform instances come without any integrated storage, so they need to have defined attached storage. If you are running a task in a project whose location is set to a Google region, SSD persistent disks are used as attached storage for computation instances during execution of computation tasks. You are able to configure the attached storage size using the sbg:GoogleInstanceType hint. The size of attached storage can be from 2 GB to 4096 GB.

Cost example

Learn more about how Google SSD persistent disks are charged from the example below. Note that the example only serves as an illustration of how costs are calculated. For current and detailed information and pricing, please refer to the official GCP documentation on persistent disks.

Running RNA-seq Alignment - TopHat on an n1-standard-4 instance with 1TB of attached persistent SSD storage:

- n1-standard-4 = 4 CPUs, 15GB RAM at $0.19 per Hour

- Additional 1TB SSD persistent disk at $0.17 per GB-month

Assuming that the workflow took 14 hours and 25 minutes (51900 seconds) to complete:

- n1-standard-4 x 14h 25m = $2.74

- 1TB persistent SSD x 14h 25m = ($0.17 per GB-month * 1024 GB * 51900 seconds) / (3600 seconds/hour * 24 hours/day * 30 days/month) = $3.49

Storage Costs

Storage is billed for all files that belong to your projects. This includes the files you upload to the CGC and files that are produced as results of analyses. If your project is in an AWS-based location, the storage service is Amazon Simple Storage Service (Amazon S3). If your project has been created in a Google-based location, your storage service is Google Cloud Storage (GCS).

| Cloud service provider | Service name | Charging unit |
|---|---|---|
| Amazon Web Services (AWS US East) | Amazon Simple Storage Service (S3) | per GB-Month |
| Google Cloud Platform | Google Cloud Storage | per GB-Month |

Please keep in mind that file storage is not charged twice for the same file. If a file is present in more than one of your projects that belong to one billing group, storage will be charged only once. When we calculate your monthly storage cost, we collect all files across all projects belonging to a single billing group, and then bill only once for each individual file within that billing group. A file is billed once regardless of which project it was uploaded to or how many times it was copied across any number of projects.

However, note the following scenarios in which we actually do bill for multiple appearances of a single file:

- When a file is copied to a project belonging to a different billing group: in this scenario, both billing groups are treated as "owning" a file, and both will pay for one copy. Again, each billing group may have multiple copies of that file in any number of projects, and will only pay once.

- When a file is re-uploaded: we do not detect for content equality, so if you upload the same file twice, the CGC sees (and bills for) two different files.

- When a file is archived and restored: restoring a file is considered as creating a temporary copy that can be worked on. While a file is restored, we bill both for keeping the archived copy and the one in active storage that can be used as input.
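The "bill once per file per billing group" rule can be illustrated with the Python sketch below. The record layout (billing group, project, file ID, size) is a hypothetical data model invented for this example and does not reflect how the CGC stores this information. Following the rules above, copies of a file are assumed to share one file ID, while a re-uploaded file gets a new ID. Archived and restored files are not modeled.

```python
from collections import defaultdict

# Hypothetical records: (billing_group, project, file_id, size_in_bytes)
records = [
    ("group-A", "project-1", "file-1", 500),  # original upload
    ("group-A", "project-2", "file-1", 500),  # copy within the same billing group
    ("group-B", "project-3", "file-1", 500),  # copy into another billing group
    ("group-A", "project-1", "file-2", 500),  # same content re-uploaded: a new file
]


def billable_bytes_per_group(records):
    """Sum file sizes per billing group, counting each file once per group."""
    seen = set()
    totals = defaultdict(int)
    for group, _project, file_id, size in records:
        if (group, file_id) in seen:
            continue  # already billed to this billing group
        seen.add((group, file_id))
        totals[group] += size
    return dict(totals)


print(billable_bytes_per_group(records))
# prints {'group-A': 1000, 'group-B': 500}
```

Here group-A pays for file-1 once, despite the two copies, plus the re-uploaded file-2, and group-B pays for its own copy of file-1.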

You are not charged for storing public files from our Public reference files repository or from our public datasets when you copy them into your project. However, you are responsible for the costs of any files derived from processing these public files. For instance, if you remap reads, you are charged for the storage of the resulting BAM files.

Sample storage cost calculation

Please note that the calculation is for information purposes only and is made according to the prices at the time of writing this page. To find out the current prices, visit the following pages depending on the selected project location:

Amazon S3. Make sure to select US East (N. Virginia) from the Region dropdown menu.

As shown in the table above, storage is charged on a per GB per month basis. This example shows how the total number of GB per month is calculated, given that the amount of data stored is likely to vary during one month.

Assume you store 100 GB (107,374,182,400 bytes) of data for 15 days in March, and 100 TB (109,951,162,777,600 bytes) of data for the final 16 days in March.

At the end of March, you would have the following usage in Byte-Hours: Total Byte-Hour usage= [107,374,182,400 bytes x 15 days x 24 hours per day] + [109,951,162,777,600 bytes x 16 days x 24 hours per day] = 42,259,901,212,262,400 Byte-Hours.

Let’s convert this to GB-Months: 42,259,901,212,262,400 Byte-Hours / 1,073,741,824 bytes per GB / 744 hours per month = 52,900 GB-Months
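The conversion from Byte-Hours to GB-Months can be checked with a short Python sketch. The function name and the list-of-tuples input format are hypothetical, and 1 GB is taken as 1,073,741,824 bytes, as in the calculation above.

```python
BYTES_PER_GB = 1024 ** 3  # 1,073,741,824 bytes


def gb_months(usage, hours_in_month):
    """usage: list of (bytes_stored, days_stored) periods within one month."""
    byte_hours = sum(stored * days * 24 for stored, days in usage)
    return byte_hours / BYTES_PER_GB / hours_in_month


march_usage = [
    (100 * 1024 ** 3, 15),  # 100 GB for the first 15 days
    (100 * 1024 ** 4, 16),  # 100 TB for the final 16 days
]

# March has 31 days, i.e. 744 hours.
print(gb_months(march_usage, hours_in_month=31 * 24))  # prints 52900.0
```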

Based on the calculated number of GB-Months, we will do cost calculation for Amazon S3.

Sample Calculation: Amazon Web Services (Amazon S3)

Amazon S3 storage has three pricing tiers depending on the amount of data stored. This is a calculation of the monthly cost based on the amount of GB-Months calculated above:

- First 50 TB per month: 51,200 GB (50 x 1024) x $0.023 = $1,177.60

- Next 450 TB per month: 1,700 GB (remainder) x $0.022 = $37.40

- Over 500 TB per month: 0 GB = $0.00

Total Storage Cost = $1,177.60 + $37.40 + $0.00 = $1,215.00
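The tiered calculation above can be sketched in Python as follows. The first two tier prices are the illustrative ones used in this example. The price for the "over 500 TB" tier is not stated on this page, so the value below is a placeholder assumption that does not affect the result for 52,900 GB-Months. The function name is hypothetical.

```python
# (tier size in GB, price per GB-Month); None marks the unbounded last tier.
S3_TIERS = [
    (50 * 1024, 0.023),   # first 50 TB
    (450 * 1024, 0.022),  # next 450 TB
    (None, 0.021),        # over 500 TB: placeholder price, an assumption
]


def tiered_storage_cost(gb_months: float, tiers) -> float:
    """Fill each tier in order and charge the usage in it at that tier's price."""
    remaining = gb_months
    total = 0.0
    for tier_size_gb, price in tiers:
        if remaining <= 0:
            break
        in_tier = remaining if tier_size_gb is None else min(remaining, tier_size_gb)
        total += in_tier * price
        remaining -= in_tier
    return total


print(f"${tiered_storage_cost(52_900, S3_TIERS):,.2f}")  # prints $1,215.00
```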

Sample Calculation: Google Cloud Storage

This is the calculation for Google Cloud Storage:

- 52,900 GB x $0.026 = $1,375.40

Total Storage Cost = $1,375.40

Data transfer costs

The CGC relies on cloud infrastructure providers for its data storage and computation capacities. When creating a project, you can select the project's location in terms of the cloud provider and region where all project resources are stored and execution is done. Data that is stored in projects on the CGC may be located in the same cloud provider's region, but can also be in different regions or even on different cloud providers, depending on the selected location for each of your projects. If your data needs to be transferred outside of the region where it is stored in order to be used in an analysis or downloaded to your local machine, this incurs data transfer costs that are charged by the cloud infrastructure provider.

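Data transfer costs can occur in the following situations:

- File transfer: files used as inputs to an execution are stored in a location that is different from your project's location.

- File download: files are downloaded to your local machine, viewed on the CGC, or exported to a volume that is in a different location from your project's location.

These costs are charged by the underlying cloud provider and are passed through by Seven Bridges.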

File transfer costs

File transfer costs are most commonly related to the use of files in executions on the CGC. When using files as inputs to a task or a Data Studio analysis and the files are stored in a region that is different from your project's location, there will be additional data transfer costs as the files are transferred from their original location (region) to the project's location where execution takes place. This can happen in the situations described below.

Using files copied between projects that are in different locations

When you copy a file between projects that are in different locations, the file will not be physically copied to the target project's location. Instead, it will be used from the location where it was originally uploaded to, as shown in the diagram below:

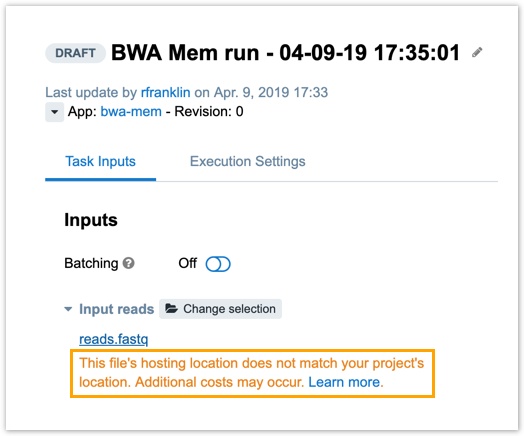

When you set such a file as an input in your task, a warning will be displayed below the input saying that the location of the file is different from the location of the project. This will cause additional costs as the file will need to leave the region or cloud provider where it is stored, to be brought to the computation instance where it will be processed.

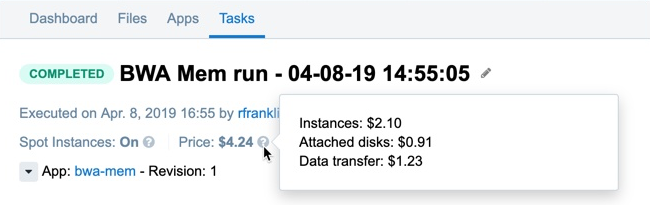

When a task containing such files is completed, the cost of data transfer is included in the total costs of task execution and will be charged together with the task price. Data transfer cost will be shown as a separate item in the task price tooltip on the task page.

Seven Bridges provides full transparency of data transfer costs charged by cloud infrastructure providers and passes them through with no extra charge or fee.

Using files from mounted AWS S3 or GCS buckets

Data transfer costs can also arise from using files from mounted cloud storage buckets, if the bucket is in a location that is different from the location of the project in which you want to use the files. When executing an analysis within the project, the files will need to be transferred to the project's location as this is where the computation will take place.

Note that these costs are charged directly to your account with the cloud storage provider, not through the CGC. Also, when using such files as inputs for a task, the CGC will not display a warning about the location of the input file being different from the project location.

To optimize your task execution costs, when creating a project and planning the analyses that will be executed within that project, please keep in mind where your input files are stored and choose a project location that matches the location of your files, if possible. Also, please keep in mind that the location of a project cannot be changed once the project has been created.

File download costs

Downloading files to your local machine, viewing them on the CGC, or exporting them to a volume that is in a different location from your project's location incurs data download costs. Calculated costs are displayed under Downloaded in the Current Usage and Spending Details sections on the Payments page, and in other billing documents (invoices) and summaries provided to you by Seven Bridges. Note that file transfer costs are not calculated and updated in real time, but at fixed intervals several times a day.

Downloading files that are stored in your project

Downloading files from the CGC generates file transfer costs. For example, if you download a total of 900 GB of files through the CGC's visual interface or the API in a month, the total monthly download cost will be 900 x $0.09 per GB = $81.00. The presented calculation is for information purposes only and is based on the assumption that you are using AWS as the underlying cloud provider and the current AWS pricing at the time of writing this document. For current AWS prices and price calculation, see the official AWS data transfer price list.

Viewing files on the CGC

File download costs will also include amounts that are charged for data transfer when previewing files or using the raw view, as the file content is downloaded to your local machine (web browser) in order to be displayed.

- Preview is available when viewing file details in your project or Data Studio analysis, for the following file formats: IPYNB, HTML, PDF, JPG, JPEG, SVG, PNG, GIF, MD. These previews are generated for files that are up to 10 MB in size, while preview is not available for larger files and is therefore not charged.

- Raw view displays the raw file content directly in the browser, without any rendering based on the file format. When scrolling through a file in raw view, its content is loaded in 100 KB chunks, except for txt files that are loaded in chunks of 10 MB. Unlike file preview, raw view doesn't have any limitations in terms of size of the viewed file. However, using raw view for binary file formats does not provide any useful information, so it should be avoided to prevent unnecessary data transfer costs.

Using SBFS

When CGC files are used through SBFS, this will also generate file transfer costs. SBFS makes CGC files available locally by downloading them to the local machine (or server instance) so they can be used for further analysis. Costs generated through SBFS will also be included in the Downloaded section in billing documents (invoices).

Exporting files to a volume that is in a different location

The CGC allows you to export a file from a project to an attached volume using the API. If the volume is in a location (region) that is different from the location of your project, this also generates data transfer costs that are charged to your billing group and shown within the Downloaded item in billing documents (invoices).

Exceptions

Downloading files from public datasets

Downloading files from public datasets does not incur data transfer costs, as these datasets are made available free of charge.
